US Pay Stubs

This section describes how to build a custom OCR API that extracts data from US Pay Stubs using the API Builder. A US Pay Stub is a document that accompanies a paycheck and itemizes the details of that payment.

Prerequisites

You’ll need at least 20 US pay stubs (images or PDFs) to train your OCR.
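Before you start, it can help to confirm that your training folder actually holds enough files. The Python sketch below counts candidate files in a local folder and compares the count with the 20-document minimum. The accepted file extensions (`.pdf`, `.jpg`, `.jpeg`, `.png`) and the function names are assumptions for illustration; check the platform for the formats it actually accepts.

```python
from pathlib import Path

# Assumed set of accepted file types; check the platform for the exact list.
ACCEPTED_SUFFIXES = {".pdf", ".jpg", ".jpeg", ".png"}
MIN_TRAINING_DOCS = 20  # minimum stated in the prerequisites


def collect_pay_stubs(folder: str) -> list[Path]:
    """Return the image/PDF files directly inside `folder`, sorted by name."""
    return sorted(
        p for p in Path(folder).iterdir()
        if p.is_file() and p.suffix.lower() in ACCEPTED_SUFFIXES
    )


def has_enough_samples(folder: str) -> bool:
    """True if the folder holds at least MIN_TRAINING_DOCS candidate files."""
    return len(collect_pay_stubs(folder)) >= MIN_TRAINING_DOCS
```

For example, `has_enough_samples("pay_stubs/")` (a hypothetical folder name) returns `False` until the folder contains at least 20 matching files.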

Define your US Pay Stub Use Case

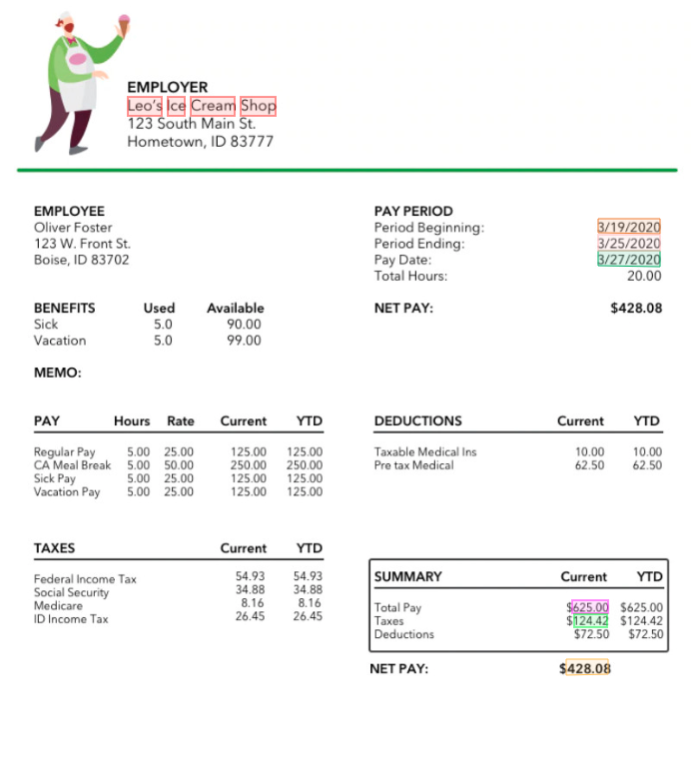

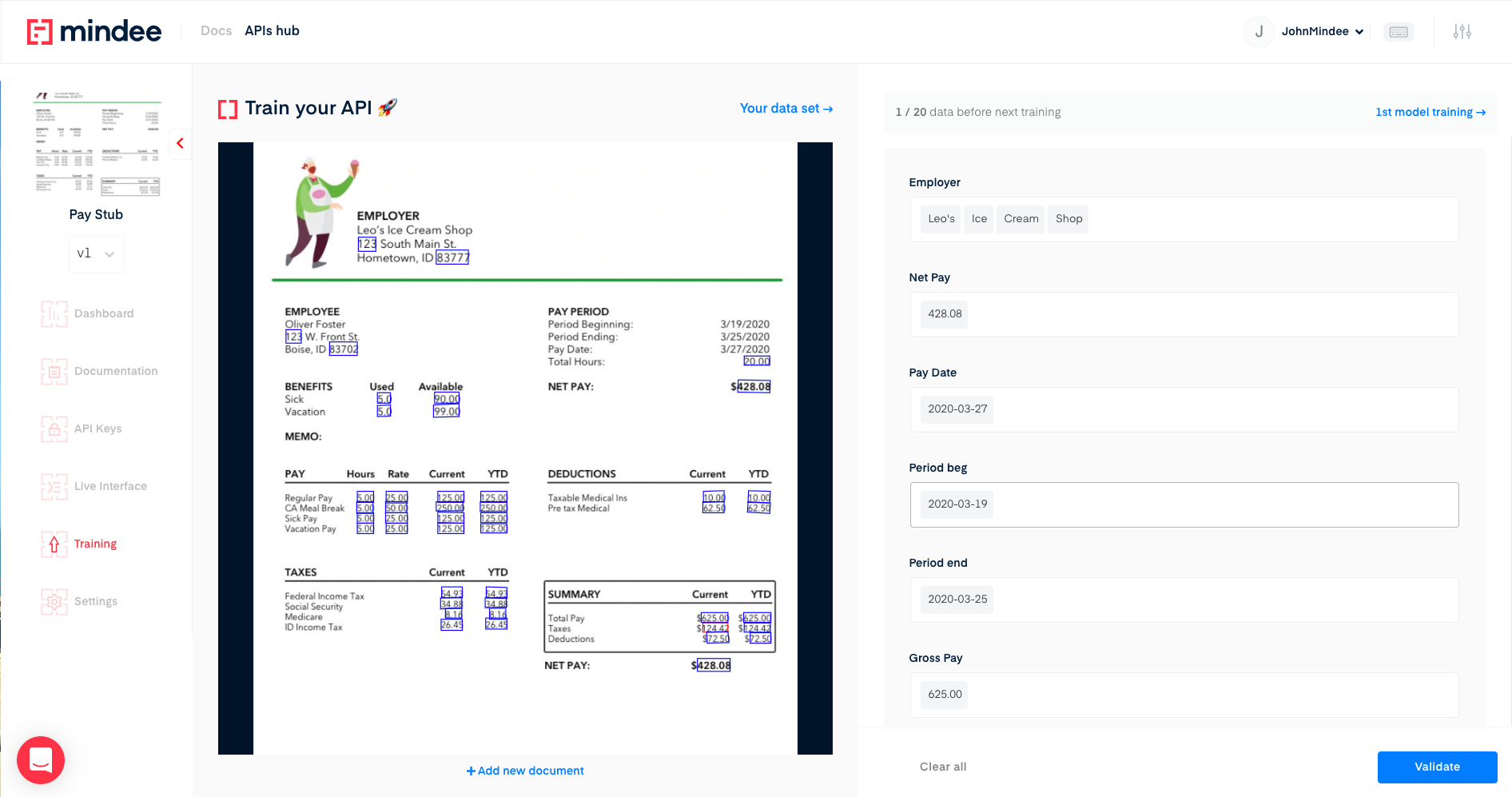

Using the sample US Pay Stub above, we’re going to define the fields we want to extract from it.

- Employer: The full name of the employer issuing the pay stub

- Net pay: Total amount paid to the employee after taxes and deductions

- Pay date: Date of wage payment

- Period beginning: Start date of the pay period

- Period ending: End date of the pay period

- Gross pay: Total gross pay before taxes and deductions

- Total tax: Total tax deducted

That’s it for this example. Feel free to add any other relevant fields that fit your requirements.
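Once the fields are extracted, a few simple cross-checks can catch obvious misreads. The sketch below holds the seven fields in a hypothetical `PayStub` dataclass and flags inconsistencies, assuming that net pay equals gross pay minus taxes and other non-negative deductions (a stub that adds reimbursements back into net pay would break that assumption). The class, the function name, and the sample values are illustrative only.

```python
from dataclasses import dataclass
from datetime import date
from decimal import Decimal


@dataclass
class PayStub:
    """Hypothetical container for the seven fields defined above."""
    employer: str
    net_pay: Decimal
    pay_date: date
    period_beginning: date
    period_ending: date
    gross_pay: Decimal
    total_tax: Decimal


def consistency_issues(stub: PayStub) -> list[str]:
    """Return human-readable problems; an empty list means no issue was found."""
    issues = []
    if stub.period_beginning > stub.period_ending:
        issues.append("period beginning is after period ending")
    if stub.net_pay > stub.gross_pay:
        issues.append("net pay exceeds gross pay")
    # Net pay = gross pay - taxes - other deductions. Assuming the other
    # deductions are non-negative, taxes cannot exceed gross minus net.
    if stub.total_tax > stub.gross_pay - stub.net_pay:
        issues.append("total tax exceeds gross pay minus net pay")
    return issues


# Made-up values for illustration only.
stub = PayStub(
    employer="Example Corp",
    net_pay=Decimal("1500.00"),
    pay_date=date(2023, 1, 20),
    period_beginning=date(2023, 1, 1),
    period_ending=date(2023, 1, 15),
    gross_pay=Decimal("2000.00"),
    total_tax=Decimal("350.00"),
)
print(consistency_issues(stub))  # []
```

`Decimal` is used for the amounts so that currency arithmetic is not affected by binary floating-point rounding.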

Deploy your API

Once you have defined the list of fields you want to extract from your US Pay Stubs, head over to the platform and follow these steps:

-

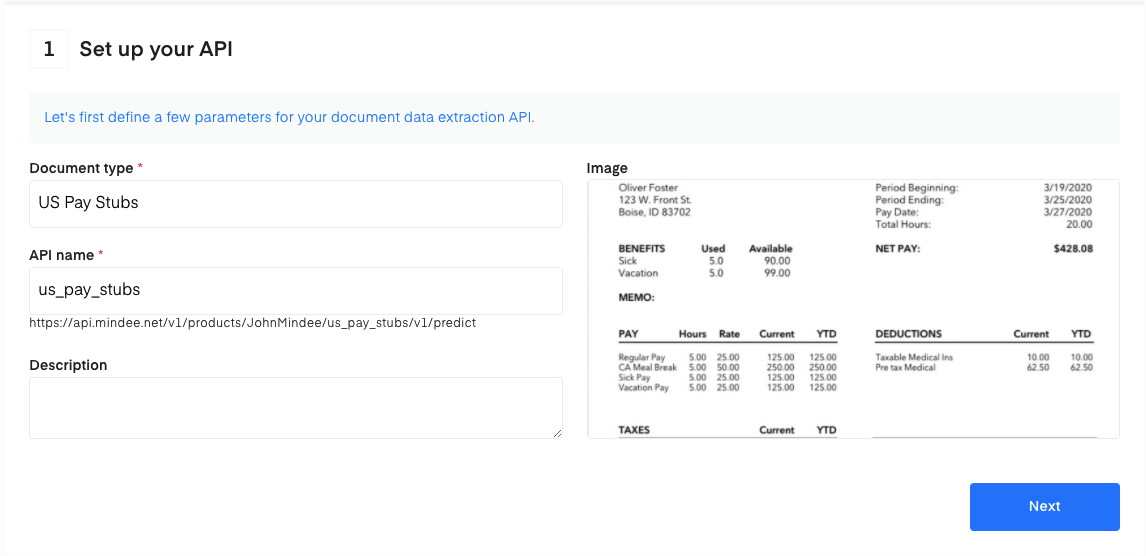

Click on the Create a new API button on the right.

-

Next, fill in the basic information about the API you want to create as seen below.

- Click on the Next button. The following page allows you to define and add your data model.

Define Your Model

There are two ways to add fields to your data model.

- Upload a JSON config file

- Manually add data

Upload a JSON Config

To add data fields by uploading a JSON config:

- Copy the following JSON into a file.

```json
{
  "problem_type": {
    "classificator": { "features": [], "features_name": [] },
    "selector": {
      "features": [
        {
          "cfg": { "filter": { "alpha": -1, "numeric": -1 } },
          "handwritten": false,
          "name": "employer",
          "public_name": "Employer",
          "semantics": "word"
        },
        {
          "cfg": { "filter": { "is_integer": -1 } },
          "handwritten": false,
          "name": "net_pay",
          "public_name": "Net Pay",
          "semantics": "amount"
        },
        {
          "cfg": { "filter": { "convention": "US" } },
          "handwritten": false,
          "name": "pay_date",
          "public_name": "Pay Date",
          "semantics": "date"
        },
        {
          "cfg": { "filter": {} },
          "handwritten": false,
          "name": "period_beg",
          "public_name": "Period beg",
          "semantics": "date"
        },
        {
          "cfg": { "filter": { "convention": "US" } },
          "handwritten": false,
          "name": "period_end",
          "public_name": "Period end",
          "semantics": "date"
        },
        {
          "cfg": { "filter": { "is_integer": -1 } },
          "handwritten": false,
          "name": "gross_pay",
          "public_name": "Gross Pay",
          "semantics": "amount"
        },
        {
          "cfg": { "filter": { "is_integer": -1 } },
          "handwritten": false,
          "name": "total_tax",
          "public_name": "Total tax",
          "semantics": "amount"
        }
      ],
      "features_name": [
        "employer",
        "net_pay",
        "pay_date",
        "period_beg",
        "period_end",
        "gross_pay",
        "total_tax"
      ]
    }
  }
}
```

- Click on Upload a JSON config and select the file you just saved.

- The data model is filled in automatically.

- Click on Create API at the bottom of the screen.

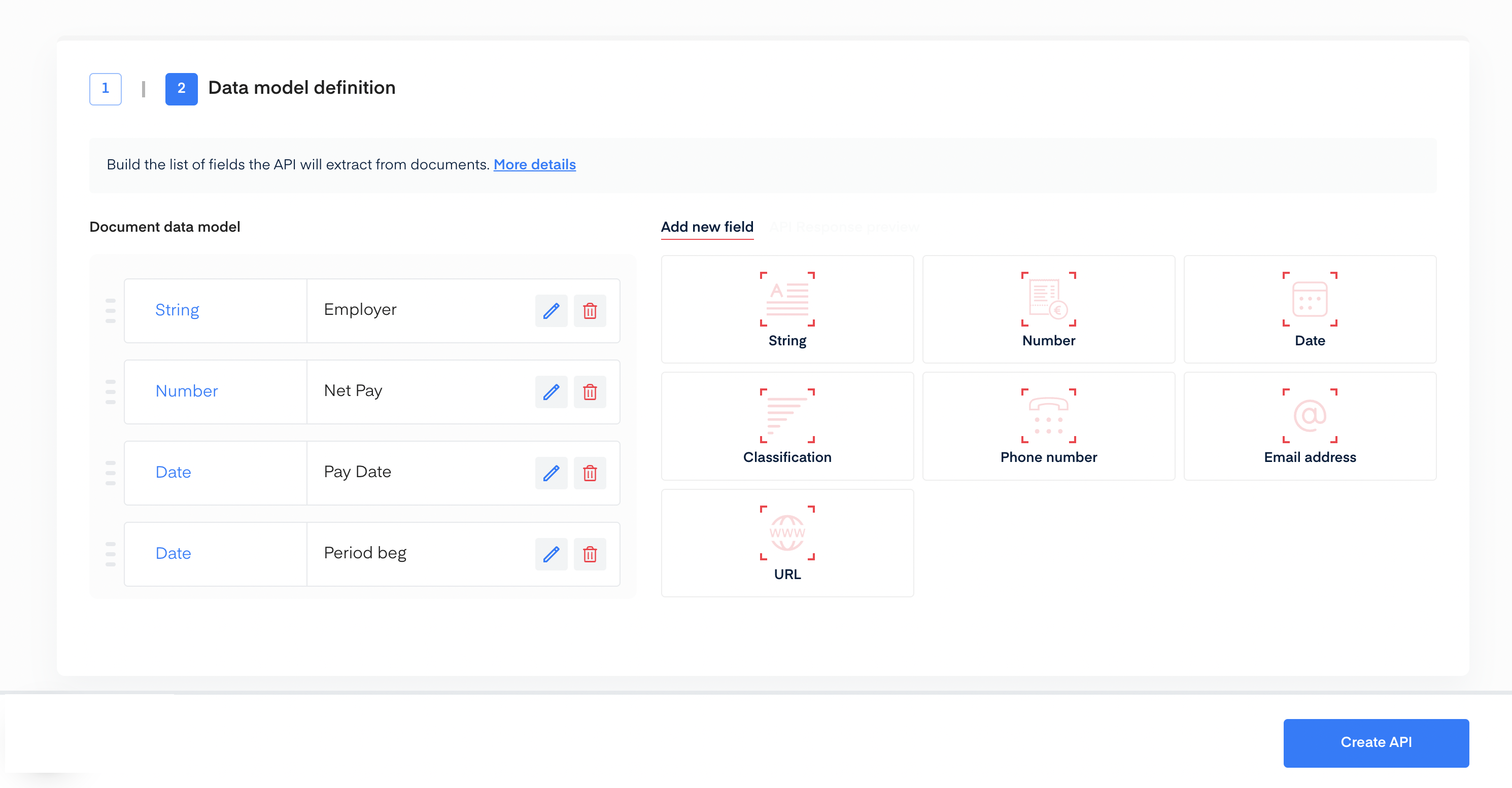
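If you edit the config by hand, a quick local check before uploading can catch mismatches between the `features` entries and the `features_name` list. The Python sketch below derives its rules from the example config in this section (the keys each feature carries, unique names, matching `features_name`, and no stray whitespace in `public_name`). It is not an official schema, and the platform may enforce different or additional rules; the function names are hypothetical.

```python
import json
from pathlib import Path

# Keys that every feature has in the example config; this is a consistency
# check derived from that example, not an official schema.
REQUIRED_KEYS = {"cfg", "handwritten", "name", "public_name", "semantics"}


def check_config(config: dict) -> list[str]:
    """Return a list of problems found in a data-model config dict."""
    problems = []
    selector = config.get("problem_type", {}).get("selector", {})
    features = selector.get("features", [])
    names = [f.get("name") for f in features]

    for i, feature in enumerate(features):
        missing = REQUIRED_KEYS - set(feature)
        if missing:
            problems.append(f"feature #{i} is missing {sorted(missing)}")
        public_name = feature.get("public_name", "")
        if public_name != public_name.strip():
            problems.append(f"feature #{i} public_name has stray whitespace")

    if len(names) != len(set(names)):
        problems.append("duplicate feature names")
    if set(selector.get("features_name", [])) != set(names):
        problems.append("features_name does not list the same names as features")
    return problems


def check_config_file(path: str) -> list[str]:
    """Load a JSON config from disk and check it; raises on invalid JSON."""
    return check_config(json.loads(Path(path).read_text(encoding="utf-8")))
```

For example, `check_config_file("pay_stub_config.json")` (a hypothetical file name) returns an empty list when none of these checks fail.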

Manually Add Data

Using the interface, you can manually add each field for the data you are extracting. For this example, here are the different field configurations used:

- Employer: type String without specifications.

- Net pay: type Number without specifications.

- Pay date: type Date without specifications.

- Period beginning: type Date without specifications.

- Period ending: type Date without specifications.

- Gross pay: type Number without specifications.

- Total tax: type Number without specifications.

Once you’re done setting up your data model, click the Create API button at the bottom of the screen.
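The manual field types correspond to entries in the JSON config: in the example config, String fields use `"semantics": "word"`, Number fields use `"amount"`, and Date fields use `"date"`. The sketch below generates a config from (name, public name, type) triples using that mapping. The default `cfg` values are copied from the example and applied uniformly (for instance, every Date field gets the US convention, although `period_beg` in the example has an empty filter), so treat the mapping and defaults as assumptions and review the output before uploading it.

```python
import copy
import json

# Mapping from interface field types to the "semantics" and default "cfg"
# values seen in the example JSON config. Both are assumptions inferred from
# that single example, not a documented contract.
TYPE_DEFAULTS = {
    "String": ("word", {"filter": {"alpha": -1, "numeric": -1}}),
    "Number": ("amount", {"filter": {"is_integer": -1}}),
    "Date": ("date", {"filter": {"convention": "US"}}),
}


def build_config(fields: list[tuple[str, str, str]]) -> dict:
    """Build a data-model config from (name, public_name, field_type) triples."""
    features = []
    for name, public_name, field_type in fields:
        semantics, cfg = TYPE_DEFAULTS[field_type]
        features.append({
            "cfg": copy.deepcopy(cfg),  # so features never share nested dicts
            "handwritten": False,
            "name": name,
            "public_name": public_name,
            "semantics": semantics,
        })
    return {
        "problem_type": {
            "classificator": {"features": [], "features_name": []},
            "selector": {
                "features": features,
                "features_name": [name for name, _, _ in fields],
            },
        }
    }


PAY_STUB_FIELDS = [
    ("employer", "Employer", "String"),
    ("net_pay", "Net Pay", "Number"),
    ("pay_date", "Pay Date", "Date"),
    ("period_beg", "Period beg", "Date"),
    ("period_end", "Period end", "Date"),
    ("gross_pay", "Gross Pay", "Number"),
    ("total_tax", "Total tax", "Number"),
]

if __name__ == "__main__":
    # Print the generated config so it can be saved and uploaded.
    print(json.dumps(build_config(PAY_STUB_FIELDS), indent=2))
```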

Train your US Pay Stub OCR

You’re all set! Now it’s time to train your US Pay Stub deep learning model in the Training section of your API.

- Upload one file at a time or a zip bundle of many files.

- Click on a field input on the right; blue boxes on the left highlight all the candidate values for that field in the document.

- Next, click on the validate arrow for each field input.

- Once you have selected the correct box(es) for every field listed on the right-hand side, click on the validate button at the bottom right to send the annotation to the model you have created.

- Repeat this process until you have annotated at least 20 documents to obtain a trained model.
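When you have many files, uploading a single zip bundle is quicker than uploading them one at a time. The sketch below uses Python's standard `zipfile` module to pack the accepted files from one folder into an archive, stored at the archive root. The accepted extensions, the flat archive layout, and the function name are assumptions, not documented requirements of the platform.

```python
import zipfile
from pathlib import Path

# Assumed set of accepted file types; adjust to what the platform accepts.
ACCEPTED_SUFFIXES = {".pdf", ".jpg", ".jpeg", ".png"}


def bundle_for_training(folder: str, archive: str) -> int:
    """Zip every accepted file in `folder` (non-recursive) into `archive`.

    Returns the number of files added.
    """
    # Collect the file list before the archive is created.
    files = sorted(
        p for p in Path(folder).iterdir()
        if p.is_file() and p.suffix.lower() in ACCEPTED_SUFFIXES
    )
    with zipfile.ZipFile(archive, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for path in files:
            # Store each file at the archive root under its original name.
            zf.write(path, arcname=path.name)
    return len(files)
```

Files with other extensions (for example `.txt` notes) are skipped, so the bundle only contains documents meant for annotation.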

To get more information about the training phase, please refer to the Getting Started tutorial.

Questions?

Join our Slack
